Synesthetic Composition

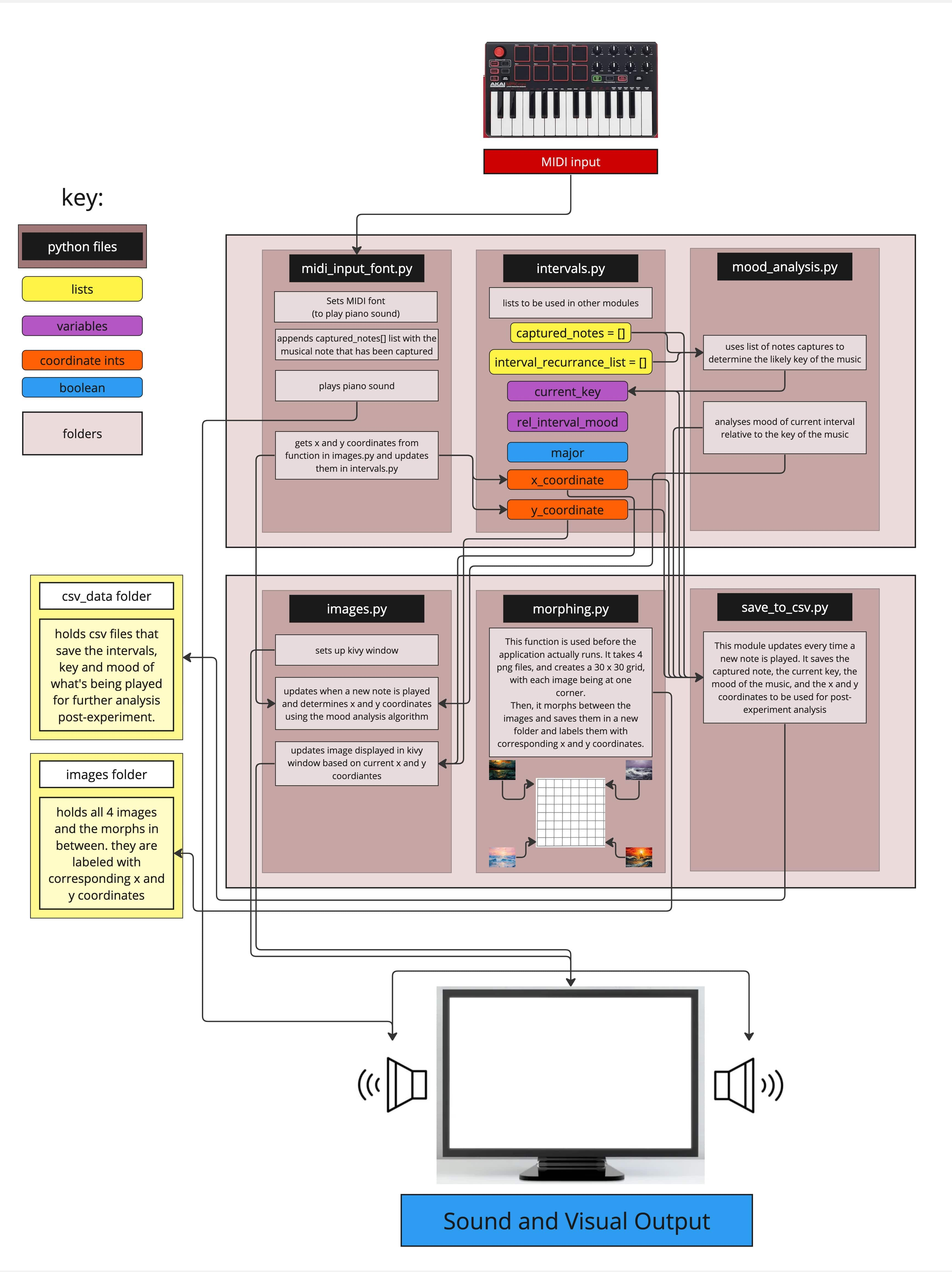

For my master's dissertation, I developed the Synesthetic Composition application (SynComp App), a Python-based tool that creates a synesthetic experience by linking musical input to dynamic visual output.

Development Environment

Core Libraries

Functionality

MIDI and Audio Integration:

The SynComp App uses the 'mido' library to receive MIDI signals and 'fluidsynth' to play the corresponding piano sounds, enabling real-time interaction with a MIDI keyboard.

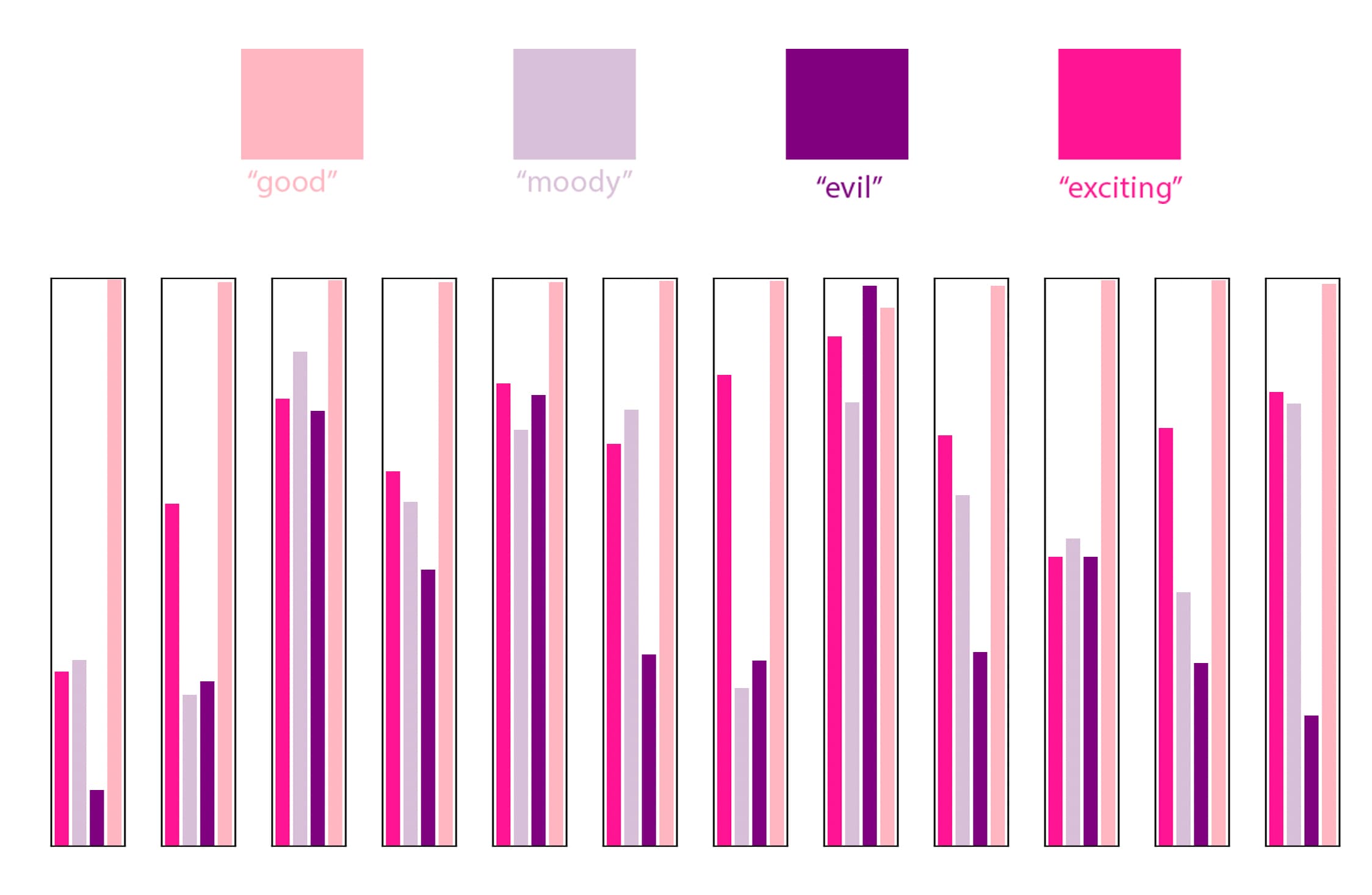
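As a rough illustration of this MIDI-to-audio path, here is a minimal sketch, not the SynComp App's actual code. It assumes the pyfluidsynth bindings for 'fluidsynth', a General MIDI SoundFont file on disk, and MIDI channel 0. The function names `handle_message` and `run` are hypothetical.

```python
def handle_message(msg, synth, channel=0):
    """Forward one MIDI message to the synth and report what was done.

    `msg` is expected to look like a mido message (type, note, velocity).
    `synth` is expected to look like a pyfluidsynth Synth (noteon, noteoff).
    """
    if msg.type == "note_on" and msg.velocity > 0:
        synth.noteon(channel, msg.note, msg.velocity)
        return "on"
    # Many keyboards send note_on with velocity 0 instead of note_off.
    if msg.type in ("note_on", "note_off"):
        synth.noteoff(channel, msg.note)
        return "off"
    return None  # Ignore everything else (pedals, control changes, ...).


def run(soundfont_path, port_name=None):
    """Play incoming MIDI notes until the input port closes (hypothetical)."""
    # Imported here so handle_message can be exercised without any hardware
    # or audio libraries installed.
    import fluidsynth  # provided by the pyfluidsynth package
    import mido

    synth = fluidsynth.Synth()
    synth.start()
    sfid = synth.sfload(soundfont_path)
    # Bank 0, preset 0 is the acoustic grand piano in General MIDI SoundFonts.
    synth.program_select(0, sfid, 0, 0)
    try:
        # port_name=None asks mido for the default input port.
        with mido.open_input(port_name) as port:
            for msg in port:  # Blocks until the next message arrives.
                handle_message(msg, synth)
    finally:
        synth.delete()
```

Calling `run("piano.sf2")` with a keyboard attached would start the loop; the SoundFont path here is only a placeholder.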

Key and Mood Analysis:

The application estimates the key and mood of the music from how often each type of interval is played. It uses this data to adjust the visual representation in real time.
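The interval-counting idea can be sketched as follows. This is an illustration under stated assumptions rather than the app's analysis: intervals are measured between consecutive notes, and the `BRIGHT` and `DARK` groupings and the `mood_score` formula are hypothetical placeholders for whatever weighting the dissertation uses. Key estimation is not covered here.

```python
from collections import Counter

# Hypothetical grouping of interval classes (in semitones) by perceived mood.
BRIGHT = {4, 7, 9}    # major third, perfect fifth, major sixth
DARK = {1, 3, 6, 8}   # minor second, minor third, tritone, minor sixth


def interval_counts(notes):
    """Count interval classes between consecutive MIDI note numbers.

    Intervals are folded into one octave, so an octave counts as a unison (0).
    """
    counts = Counter()
    for previous, current in zip(notes, notes[1:]):
        counts[abs(current - previous) % 12] += 1
    return counts


def mood_score(counts):
    """Return a score in [-1, 1]: positive leans bright, negative leans dark."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    bright = sum(counts[i] for i in BRIGHT)
    dark = sum(counts[i] for i in DARK)
    return (bright - dark) / total
```

For example, the notes `[60, 64, 67]` (C, E, G) give one major third and one minor third, so this particular score comes out neutral.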

Visual Output:

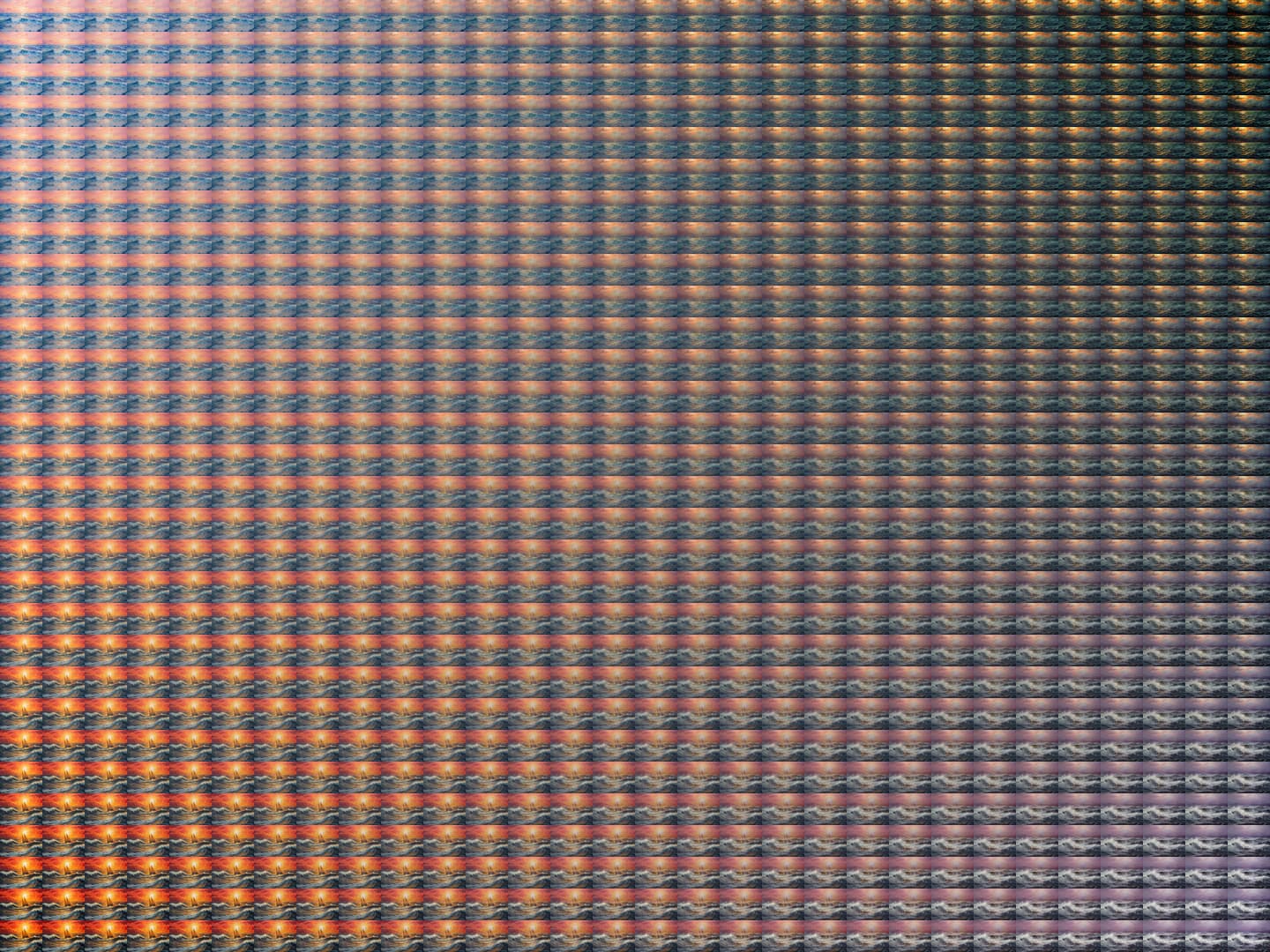

The app uses a 30x30 grid to represent mood states and transitions between four distinct impressionist seascapes according to the music's mood. The transitions are produced by incremental image morphing.
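To make the idea of moving gradually between four images concrete, here is a minimal sketch that uses a plain linear cross-fade as a stand-in for the app's morphing technique, which is not specified here. It assumes the mood is reduced to a single position between 0 and 3 (one unit per image) and that images are nested lists of RGB tuples; the 30x30 grid is not modeled. All function names are hypothetical.

```python
def locate_segment(position, n_images=4):
    """Map a mood position in [0, n_images - 1] to (start index, blend amount)."""
    position = min(max(position, 0.0), n_images - 1)
    # Cap the index so the last image is reached with a blend amount of 1.0.
    index = min(int(position), n_images - 2)
    return index, position - index


def blend_pixel(a, b, t):
    """Linearly interpolate between two RGB tuples; t=0 gives a, t=1 gives b."""
    return tuple(round((1 - t) * x + t * y) for x, y in zip(a, b))


def morph_frame(images, position):
    """Build the frame for a mood position by cross-fading adjacent images.

    `images` is a list of same-sized images, each a list of rows of RGB tuples.
    """
    index, t = locate_segment(position, len(images))
    source, target = images[index], images[index + 1]
    return [
        [blend_pixel(p, q, t) for p, q in zip(source_row, target_row)]
        for source_row, target_row in zip(source, target)
    ]
```

Nudging `position` by a small amount on each update yields the incremental effect. With real image files, Pillow's `Image.blend` performs the same per-pixel interpolation far more efficiently.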

CSV Data Logging:

In addition to generating real-time visuals, the application saves CSV files containing the intervals played. This data can be used for further analysis and is aligned with other collected participant data to evaluate patterns in music perception and mood.
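A minimal sketch of such a log, using Python's standard `csv` module. The column names and the `(timestamp, note)` event format are assumptions made for illustration; the actual files may be laid out differently.

```python
import csv
import io

# Hypothetical column layout for one logged interval per row.
FIELDNAMES = ["timestamp_s", "from_note", "to_note", "interval_semitones"]


def write_interval_log(events, out_file):
    """Write one CSV row per interval between consecutive notes.

    `events` is a list of (timestamp_in_seconds, midi_note) in playing order.
    `out_file` is any writable text file object.
    """
    writer = csv.DictWriter(out_file, fieldnames=FIELDNAMES)
    writer.writeheader()
    for (_, previous_note), (timestamp, note) in zip(events, events[1:]):
        writer.writerow({
            "timestamp_s": timestamp,
            "from_note": previous_note,
            "to_note": note,
            # Signed: positive for an upward step, negative for a downward one.
            "interval_semitones": note - previous_note,
        })


def interval_log_text(events):
    """Return the CSV log as a string, which is handy for quick inspection."""
    buffer = io.StringIO()
    write_interval_log(events, buffer)
    return buffer.getvalue()
```

To write to disk, open the file with `newline=""` as the `csv` documentation recommends, for example `with open("session.csv", "w", newline="") as f: write_interval_log(events, f)`. The file name is a placeholder.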

Application Structure

This graphic gives a visual understanding of how the app is structured and how the modules interact with each other.